This page features rotating highlights from projects involving ESC researchers:

Journalism scholars have become increasingly concerned with how our changing, ever more digital media environment has shifted traditional understandings of how news outlets build trust with audiences. In “Trust Signals: An Intersectional Approach to Understanding Women of Color’s News Trust,” Peterson-Salahuddin conducted focus groups with US women of color, a community marginalized along, at minimum, race and gender, to understand how their positionality shapes the way they conceptualize news trust in our contemporary digital media environment. Across eight focus groups with 45 women of color, she found that participants held varying conceptualizations of antecedents of trust, such as accuracy and bias, in traditional versus digital news media. Drawing on these findings, Peterson-Salahuddin suggests how news organizations can better establish trust across marginalized communities.

In “Navigating the empty shell: the role of articulation work in platform structures,” Huber and co-author Casey Pierce explore the strategies teletherapy platform workers use to produce sustainable, quality services within platform structures that simultaneously over- and under-determine their work. These therapists describe navigating both the presence of platform controls and the absence of features that support professional best practices and regulatory requirements. Huber and Pierce call this absence the “empty shell” characteristic of platforms and argue that it is a central technique through which platforms achieve scale. Their findings detail the “articulation work” therapists employ: communicative strategies for navigating the empty shell and providing quality care to their clients. Attending to articulation work in an emerging platform labor context such as teletherapy contributes to our understanding of the politics of platforms.

In Trans Technologies (MIT Press, 2025), Haimson highlights the complexities that arise when technology design is deeply personal, the care practices that permeate trans technology creation, and the ways trans people often turn to each other for support via technology when mainstream systems and technologies reject or exclude them. Haimson examines the world of trans technologies: apps, games, health resources, supplies, art, and other technologies designed to help address some of the challenges transgender people face. The book illuminates how technology helps us imagine new possibilities for trans people and communities, while trans experiences, in turn, help us imagine new possibilities for technology.

Big-DIG (Big Data, Innovation and Governance) works at the intersection of communication, information, and policy studies to generate data-driven knowledge on innovation diffusion, impact, and governance in the world-system. Key investigations include digital rights activism after the Arab Spring and China’s social credit system. Our research applies cutting-edge data science and field research techniques to map investments in, and the diffusion of, big data-driven statecraft; the diffusion of tech-policy norms in digital rights communities of practice; and the impacts of public sector ICT infrastructure diffusion.

Emotion recognition algorithms recognize, infer, and harvest emotions using data sources such as social media behavior, streaming service use, voice, facial expressions, and biometrics, often in ways that are opaque to the people providing these data. In this project, we uncover how people conceive of their emotions and emotional data when they consider how emotion recognition algorithms harvest that data. We argue that emotional data warrants particular and explicit attention in research and practice.

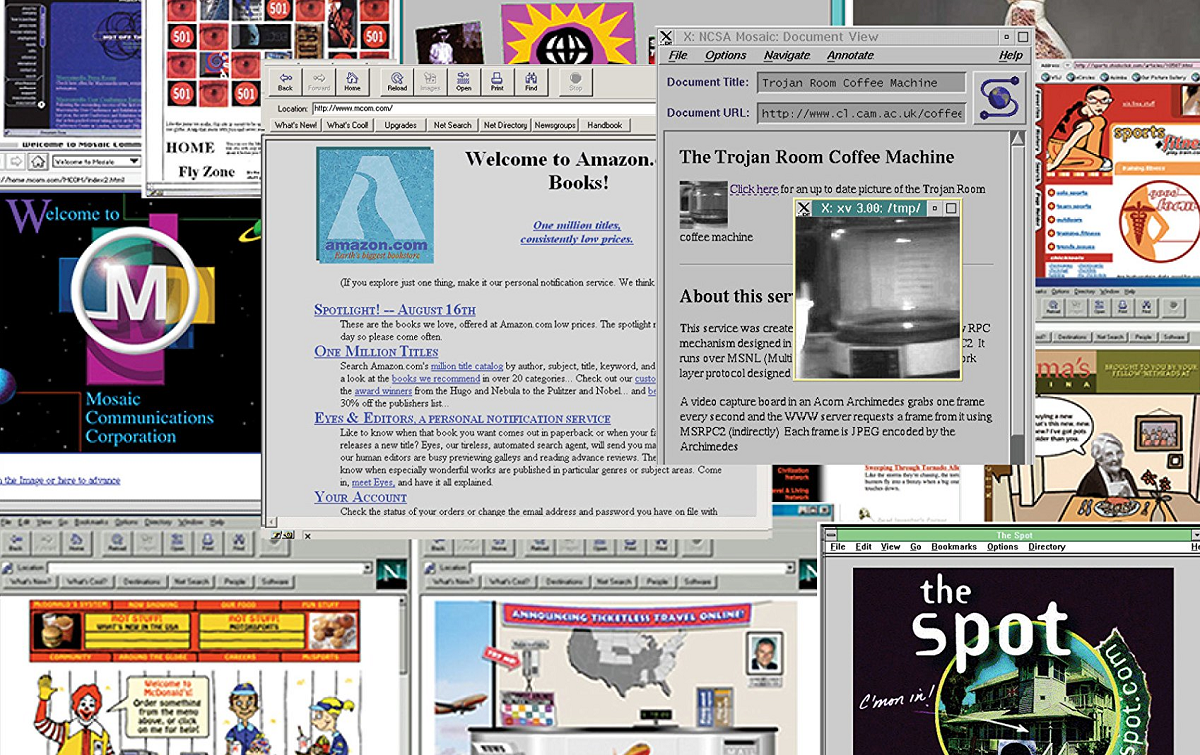

In the 1990s, the World Wide Web helped transform the Internet from the domain of computer scientists into a playground for mass audiences. The web changed the way many Americans experienced media, socialized, and interacted with brands. Businesses rushed online to set up corporate “home pages,” and as a result, a new cultural industry was born: web design. To today’s internet users, the early web may seem primitive, clunky, and graphically inferior. After the dot-com bubble burst in 2000, this pre-crash era was dubbed “Web 1.0,” a retronym meant to distinguish the early web from what came after. Dot-com Design offers a comprehensive look at the evolution of the web industry, tracing its path from these early years to the vast internet we browse today.

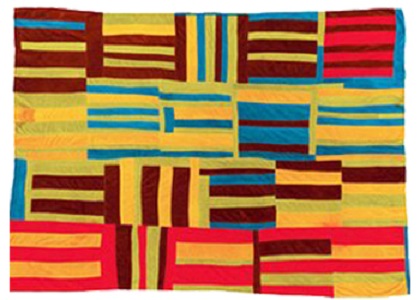

Many cultural designs show how math and computing ideas are embedded in Indigenous traditions, graffiti art, and other surprising sources. These “heritage algorithms” can help students learn STEM principles as they simulate the original artifacts and develop their own creations. By countering misconceptions about race and gender in STEM+C (STEM plus computing), engaging students, and working with teachers, Culturally Situated Design Tools (CSDTs) can simultaneously teach science, empower students, and change perspectives.

Rising unemployment and unstable, precarious working conditions sit in deep tension with growing bureaucratic and corporate interests in automating work across sectors. Who defines and understands the risks, impacts, and benefits of this rapidly changing socio-technological landscape remains an open question. Scholars, policymakers, politicians, and the media have responded with sharp critiques of digital labor platforms such as Uber and Amazon Mechanical Turk, which have deepened precarious conditions of work and life for minorities rather than bringing about equal opportunity.

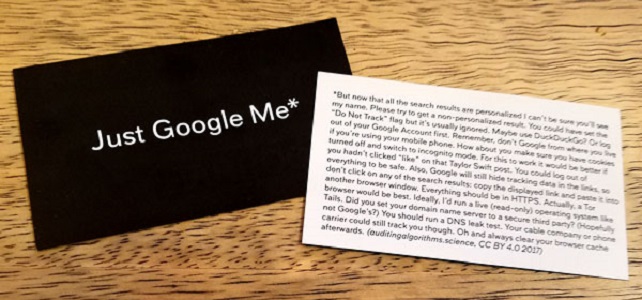

Secret, misfiring algorithms have recently been used to deny a college education to deserving students, to hide high-paying jobs from women, to send innocent people to jail, and to label people with racial slurs. These computational fiascoes result from new automated, software-based decision systems in finance, media, information, transportation, and learning. Such applications of computing can easily produce harmful outcomes that system designers did not foresee. This research project advances new “algorithm auditing” techniques that could improve these systems by making their consequences visible from the outside. It includes a series of events designed to produce a white paper that will help define and develop the emerging research community for algorithm auditing.